Using Weave to create a Docker container network

In the Docker networking model, every container on a host is attached to the same private network managed by the Docker daemon. Containers are not visible to the outside world, so you need to configure port mappings and/or links.

To run a cluster across VMs with this model, we would need to expose every port our containers use to communicate with the outside world. With Docker, this means using docker run -p <host-port>:<container-port> extensively for each service we want to expose.

This gets painful with software like Scalaris, a distributed key/value store, where you can run many nodes on one host or even in one container. Keeping track of each exposed port quickly becomes a nightmare: you have to micromanage a range of ports for every container (and every node inside it) running Scalaris. Each time an instance is created or shut down, you must update the port assignments so that nodes keep using them consistently without colliding with each other.
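To make that bookkeeping concrete, here is a minimal, hypothetical sketch of what it looks like without Weave when several Scalaris containers share one host. It assumes one Scalaris node per container, listening on ports 14195 and 8000 inside the container (the ports used by the run script later in this post). BASE_PORT and port_flags are made-up names for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: manual port bookkeeping without Weave.
# Assumption: each container runs one Scalaris node listening on 14195
# (Scalaris) and 8000 (web dashboard), so every container on the same
# host needs its own pair of host ports.
BASE_PORT=20000  # hypothetical start of the reserved host port range

# port_flags INDEX: prints the docker run -p flags (host-port:container-port)
# for the INDEX-th container on this host (0-based).
port_flags() {
  local index=$1
  local scalaris_port=$(( BASE_PORT + 2 * index ))
  local web_port=$(( scalaris_port + 1 ))
  echo "-p ${scalaris_port}:14195 -p ${web_port}:8000"
}

# Print the flags for three containers sharing one host.
for i in 0 1 2; do
  echo "container $i: $(port_flags "$i")"
done
```

Every time a container is added or removed, this allocation has to be recomputed and kept consistent across hosts, which is exactly the micromanagement described above.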

As always, you are adding your own layer of complexity on top of an already complex architectural model.

The main issue with containers is that they force us to change the way we work, using links and exposing ports the way we are told to. This is far from intuitive, and many newcomers to Docker struggle with its complex networking model. A common topology that lets us manage our application instances the same way we do on bare-metal machines is essential for simplicity, maintenance and consistency at large scale.

This is where a project called Weave comes to the rescue.

Weave creates a virtual network that connects our Docker containers together, even across hosts. There is no need to link containers or micromanage ports: containers can see each other as long as they are on the same Weave network.

Promises are great, but let's see it in action.

To do so, we will run Scalaris nodes in Docker containers located on different hosts across the network. These hosts could be VMs on a single machine, machines in different datacenter regions, or even machines at entirely different cloud providers.

First, what the hell is Scalaris?

Scalaris is a good candidate to test Weave as it is a Peer to Peer network with a Chord overlay (to simplify).

You have probably never heard of Scalaris before. This might be because of its academic roots: it originated at the Zuse Institute Berlin and is still actively maintained.

To give you a small sneak peek: Scalaris is a peer-to-peer, in-memory key/value store. It is written in Erlang and has bindings for many languages, from Java to Python. The network overlay is a Chord ring, a well-known topology in peer-to-peer software. It uses a slightly modified version of the Paxos commit protocol for its transactions. And obviously you can store your key/value pairs, which are replicated through the ring on a given number N of peers; with N replicas, it tolerates up to (N/2)-1 failures. Compared to other key/value stores, Scalaris provides strong data consistency and support for transactions.
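To make the failure bound concrete, here is a small arithmetic sketch. It assumes N is the number of replicas of each key and that an operation needs a majority of those replicas to answer. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch of majority-quorum arithmetic, assuming N replicas per key and
# that a majority of them must be reachable for an operation to succeed.

# majority N: smallest number of replicas that forms a majority.
majority() { echo $(( $1 / 2 + 1 )); }

# tolerated_failures N: replicas that can fail while a majority survives.
tolerated_failures() { echo $(( $1 - $(majority "$1") )); }

for n in 3 4 5; do
  echo "N=$n majority=$(majority "$n") tolerated=$(tolerated_failures "$n")"
done
```

For an even N this gives (N/2)-1. With 4 replicas, for example, a majority is 3, so one replica can fail.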

Scalaris is great in the sense that it just works. You create your ring of peers, and you can easily add more nodes to the cluster to cope with demand. No extensive administration is needed (unless you use containers!).

One aspect often criticized by the community is that Scalaris does not persist data: everything is in memory. If your whole cluster goes down, you are left with... nothing. But this is by design: a Scalaris cluster is not meant to go down. It should always run.

Well-known equivalents are Riak (Peer to Peer but document-oriented) and etcd.

How this could be used

Scalaris could be used to manage a cluster of Docker containers, for service discovery or for storing cluster metadata. In particular, I wanted to evaluate mature key/value stores as alternatives to etcd or Consul, which are used in many Docker-based cloud platform experiments.

Back to our task

We are going to run a Scalaris cluster in Docker containers spread over VMs provisioned with static IPs, for local experimentation.

What is our roadmap for this task?

- Provision 3 Virtual Machines with Docker and Weave installed using Vagrant

- Get the Scalaris Docker image on each host

- Initialize a Weave network on each host

- Create containers running a single Scalaris node using Weave

- Pray to the old gods and the new

- Finally, test our Scalaris Cluster by accessing the web dashboard and storing a few key/value pairs.

Prerequisites

For this task you'll need to install on your machine:

- Git

- Vagrant

- VirtualBox

And that's it!

Cloning the repository

Create a test folder and clone the git repository:

```bash
git clone https://github.com/abronan/scalaris-weave
cd scalaris-weave
```

Creating our Virtual Machines using Vagrant

Nothing special here. The VMs are provisioned from an Ubuntu Trusty (14.04) image, and a provisioning script installs the dependencies (Docker and Weave).

Let's start our hosts using:

```bash
vagrant up
```

Open three terminals and ssh into these hosts:

```bash
vagrant ssh host0
vagrant ssh host1
vagrant ssh host2
```

Create the Scalaris Docker image

You can jump straight to the next part if you are not interested in the Docker image creation process.

On each virtual machine, we are going to use the image abronan/scalaris-weave.

The Dockerfile for this image is below:

```dockerfile
# Scalaris Weave
# v0.1.0

FROM ubuntu:trusty
MAINTAINER Alexandre Beslic

# Install and setup project dependencies
RUN apt-get update && apt-get install -y erlang wget curl conntrack lsb-release supervisor openssh-server
RUN mkdir -p /var/run/sshd
RUN mkdir -p /var/log/supervisor
RUN locale-gen en_US en_US.UTF-8
COPY supervisord.conf /etc/supervisor/conf.d/supervisord.conf
RUN echo 'root:scalaris' | chpasswd

# Install and setup Scalaris
RUN echo "deb http://download.opensuse.org/repositories/home:/scalaris/xUbuntu_14.04/ ./" >> /etc/apt/sources.list
# Build steps already run as root, and the base image does not ship sudo
RUN wget -q http://download.opensuse.org/repositories/home:/scalaris/openSUSE_Factory/repodata/repomd.xml.key -O - | apt-key add -
RUN apt-get update && apt-get install -y scalaris
ADD ./scalaris-run.sh /bin/scalaris-run.sh
RUN chmod +x /bin/scalaris-run.sh

EXPOSE 22
CMD ["/usr/bin/supervisord"]
```

It is quite dense for a Dockerfile (and not optimized), but it does nothing too fancy:

- We install dependencies including Erlang, wget, the SSH server and Supervisor (which manages the sshd and Scalaris processes in the background).

- We apply some useful configuration, install Scalaris, expose the SSH port and finally run the Supervisor daemon.

So far so good.

The Supervisor configuration file is below:

```ini
[supervisord]
nodaemon=true

[program:sshd]
command=/usr/sbin/sshd -D
stdout_logfile=/var/log/supervisor/%(program_name)s.log
stderr_logfile=/var/log/supervisor/%(program_name)s.log
autorestart=true

[program:scalaris]
command=bash -c ". /bin/scalaris-run.sh && erl -name client@127.0.0.1 -hidden -setcookie 'chocolate chip cookie'"
pidfile=/var/run/scalaris-monitor_node1.pid
stdout_logfile=/var/log/supervisor/%(program_name)s.log
stderr_logfile=/var/log/supervisor/%(program_name)s.log
```

It describes the processes we need to supervise, here sshd and Scalaris. Instead of launching Scalaris directly, we source a script called scalaris-run.sh, then start an Erlang shell on localhost as a foreground process so that the supervised program keeps running.

The container is parameterized through environment variables passed to docker run (with -e) at launch. The scalaris-run.sh script started by Supervisor is the one below:

```bash
#!/bin/bash

if [ -z "$JOIN_IP" ]; then
  echo "error - you must set the address to join" >&2
  exit 1
fi

if [ -z "$LISTEN_ADDRESS" ]; then
  echo "error - you must set the listen address" >&2
  exit 1
fi

# Replace dots by commas in IP addresses (Erlang tuple syntax)
JOIN_IP_COMMA=$(echo "$JOIN_IP" | tr '.' ',')
LISTEN_ADDRESS_COMMA=$(echo "$LISTEN_ADDRESS" | tr '.' ',')

# Fill in the minimal configuration for Scalaris
echo "
{listen_ip , {$LISTEN_ADDRESS_COMMA}}.
{mgmt_server, {{$JOIN_IP_COMMA},14195,mgmt_server}}.
{known_hosts, [{{$JOIN_IP_COMMA},14195, service_per_vm}
]}.
" >> /etc/scalaris/scalaris.local.cfg

# Debug print the config file
cat /etc/scalaris/scalaris.local.cfg

# Generate a random UUID used to name this node
export UUID=$(cat /proc/sys/kernel/random/uuid)

# Manage the Scalaris ring
if [ "$JOIN_IP" == "$LISTEN_ADDRESS" ]; then
  # We are the first node: start the management server (-m) and create the ring (-f)
  scalarisctl -m -d -n "$UUID@$LISTEN_ADDRESS" -p 14195 -y 8000 -s -f start
else
  # Join the existing ring
  scalarisctl -d -n "$UUID@$LISTEN_ADDRESS" -p 14195 -y 8000 -s start
fi
```

Point by point:

- It checks that the environment variables JOIN_IP and LISTEN_ADDRESS are set. If not, it exits.

- It converts the IPs from the environment variables to use commas instead of dots. This is because the Scalaris configuration file /etc/scalaris/scalaris.local.cfg expects IPs of the form 127,0,0,1.

- It generates a minimal configuration with the listening address as well as the address of the first node. If we are the first node, both addresses are the same.

- If we are the initiator, it runs scalarisctl with the proper flags (namely -d, -m and -f). If not, it runs scalarisctl to join an existing network.
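The string handling in the script can be tried on its own. The sketch below re-expresses the same steps as pure bash functions so the generated configuration can be checked without Scalaris installed. to_erlang_ip, render_config and is_first_node are hypothetical names that are not part of the image.

```bash
#!/usr/bin/env bash
# Sketch of the transformations done by scalaris-run.sh, written as pure
# functions (hypothetical names); the output mirrors the script's config.

# to_erlang_ip IP: 10.2.1.3 -> 10,2,1,3 (contents of an Erlang tuple).
to_erlang_ip() { echo "${1//./,}"; }

# render_config LISTEN_ADDRESS JOIN_IP: prints the minimal Scalaris config.
render_config() {
  local listen join
  listen=$(to_erlang_ip "$1")
  join=$(to_erlang_ip "$2")
  cat <<EOF
{listen_ip , {${listen}}}.
{mgmt_server, {{${join}},14195,mgmt_server}}.
{known_hosts, [{{${join}},14195, service_per_vm}]}.
EOF
}

# is_first_node LISTEN_ADDRESS JOIN_IP: succeeds when this node creates the ring.
is_first_node() { [[ "$1" == "$2" ]]; }

# Example: the second node, joining the ring created by 10.2.1.3.
render_config 10.2.1.4 10.2.1.3
is_first_node 10.2.1.4 10.2.1.3 || echo "joining existing ring"
```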

Pulling the Scalaris image

Now that we have explained the image creation process, let's pull the Scalaris image for our experiment. On each host, type:

```bash
sudo docker pull abronan/scalaris-weave
```

The image is heavy and this might take some time. Go grab a coffee in the meantime.
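If you prefer to stay in a single terminal, the pull can also be driven from your workstation. This is a sketch that assumes the machine names host0, host1 and host2 from the Vagrantfile and Vagrant's vagrant ssh NAME -c COMMAND form. pull_everywhere is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: pull the image on every Vagrant host from the workstation.
# Assumes the machine names host0..host2 defined in the Vagrantfile.

HOSTS=(host0 host1 host2)

# pull_everywhere IMAGE: runs docker pull on each host in turn and
# stops at the first failure.
pull_everywhere() {
  local image=$1 host
  for host in "${HOSTS[@]}"; do
    echo "==> $host"
    vagrant ssh "$host" -c "sudo docker pull $image" || return 1
  done
}

# Usage, from the scalaris-weave directory:
#   pull_everywhere abronan/scalaris-weave
```

Pulling sequentially keeps the output readable. Since the image is heavy, you could also run the three pulls in parallel from separate terminals as described above.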

Running the Weave network

Now that we have prepared everything, let's run our Scalaris cluster for good. We need to initialize the Weave network on each host.

On host0, type:

```bash
sudo weave launch 10.2.0.1/16 192.168.42.101 192.168.42.102
```

Note: the CIDR argument is now optional. See https://github.com/zettio/weave/pull/154

On host1:

```bash
sudo weave launch 10.2.0.1/16 192.168.42.100 192.168.42.102
```

Finally on host2:

```bash
sudo weave launch 10.2.0.1/16 192.168.42.100 192.168.42.101
```

With these commands, we start a Weave router on each host and give it the addresses of the other two hosts as peers. The router runs in its own container (zettio/weave) and sits on the 10.2.0.0/16 address space.
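Since each router only needs the addresses of the other hosts, the three launch commands can be derived from a single list. This dry-run sketch just prints them. It assumes host0 is 192.168.42.100 (the one address host0 does not list as a peer), and weave_launch_cmd is a hypothetical name.

```bash
#!/usr/bin/env bash
# Dry-run sketch: derive each host's weave launch command from one list
# of host IPs, so every router peers with all the other hosts.

HOST_IPS=(192.168.42.100 192.168.42.101 192.168.42.102)
WEAVE_CIDR=10.2.0.1/16

# weave_launch_cmd INDEX: prints the launch command for host INDEX.
weave_launch_cmd() {
  local self=$1 i
  local -a peers=()
  for i in "${!HOST_IPS[@]}"; do
    # Skip our own address; every other host becomes a peer.
    (( i == self )) || peers+=("${HOST_IPS[i]}")
  done
  echo "sudo weave launch $WEAVE_CIDR ${peers[*]}"
}

for i in 0 1 2; do weave_launch_cmd "$i"; done
```

Adding a fourth host then means appending one address to HOST_IPS rather than editing every command by hand.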

Once the Weave network is running on the same address space on host0, host1 and host2, it is time to run our Scalaris containers. We give each container an address on a subnet of the Weave network (in this example, 10.2.1.0/24).
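The addressing plan can be sanity-checked with a little bash arithmetic. Each container address should fall inside the 10.2.1.0/24 subnet, which itself sits inside the 10.2.0.0/16 space the routers were launched on. The following is a minimal sketch, and ip_to_int and in_subnet are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: check that addresses fall inside a given IPv4 subnet using
# bash integer arithmetic (64-bit, so 32-bit masks are safe).

# ip_to_int A.B.C.D: converts a dotted IPv4 address to an integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_subnet IP NETWORK PREFIX: succeeds if IP is inside NETWORK/PREFIX.
in_subnet() {
  local ip net mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "$2")
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

for ip in 10.2.1.3 10.2.1.4 10.2.1.5; do
  in_subnet "$ip" 10.2.1.0 24 && echo "$ip is inside 10.2.1.0/24"
done
in_subnet 10.2.1.0 10.2.0.0 16 && echo "10.2.1.0/24 is inside 10.2.0.0/16"
```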

On host0 (the first node), type:

```bash
sudo weave run 10.2.1.3/24 -d --name first -p 8000:8000 -e JOIN_IP=10.2.1.3 -e LISTEN_ADDRESS=10.2.1.3 abronan/scalaris-weave
```

On host1:

```bash
sudo weave run 10.2.1.4/24 -d --name second -p 8000:8000 -e JOIN_IP=10.2.1.3 -e LISTEN_ADDRESS=10.2.1.4 abronan/scalaris-weave
```

Finally, on host2:

```bash
sudo weave run 10.2.1.5/24 -d --name third -p 8000:8000 -e JOIN_IP=10.2.1.3 -e LISTEN_ADDRESS=10.2.1.5 abronan/scalaris-weave
```

You might be surprised that we create our containers with weave run rather than docker run. This is because weave run invokes docker run -d itself and then attaches the container to the Weave network with the given address (I added the -d flag for clarity, but weave already passes it).
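The three weave run commands differ only in the node address and container name, while JOIN_IP always points to the first node. The dry-run sketch below generates them from one table, using the values from the commands above. weave_run_cmd is a hypothetical name.

```bash
#!/usr/bin/env bash
# Dry-run sketch: build each host's weave run command from one table,
# so the first node's address (JOIN_IP) is written only once.

NAMES=(first second third)
NODE_IPS=(10.2.1.3 10.2.1.4 10.2.1.5)
PREFIX=24
IMAGE=abronan/scalaris-weave
JOIN_IP=${NODE_IPS[0]}  # every node joins the ring created by the first one

# weave_run_cmd INDEX: prints the command to type on host INDEX.
weave_run_cmd() {
  local i=$1
  echo "sudo weave run ${NODE_IPS[i]}/$PREFIX -d --name ${NAMES[i]}" \
       "-p 8000:8000 -e JOIN_IP=$JOIN_IP -e LISTEN_ADDRESS=${NODE_IPS[i]} $IMAGE"
}

for i in 0 1 2; do weave_run_cmd "$i"; done
```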

Note that we still map container port 8000 to the same port on the host with the -p flag. Even though our cluster runs on its own network, the web interface port must still be mapped so that it is accessible from the host in our local experiment.

And really, that's it. Two simple commands on each host, and the virtual network is set up for our containers. In theory, they can now communicate with each other and build the Chord ring. Let's check that.

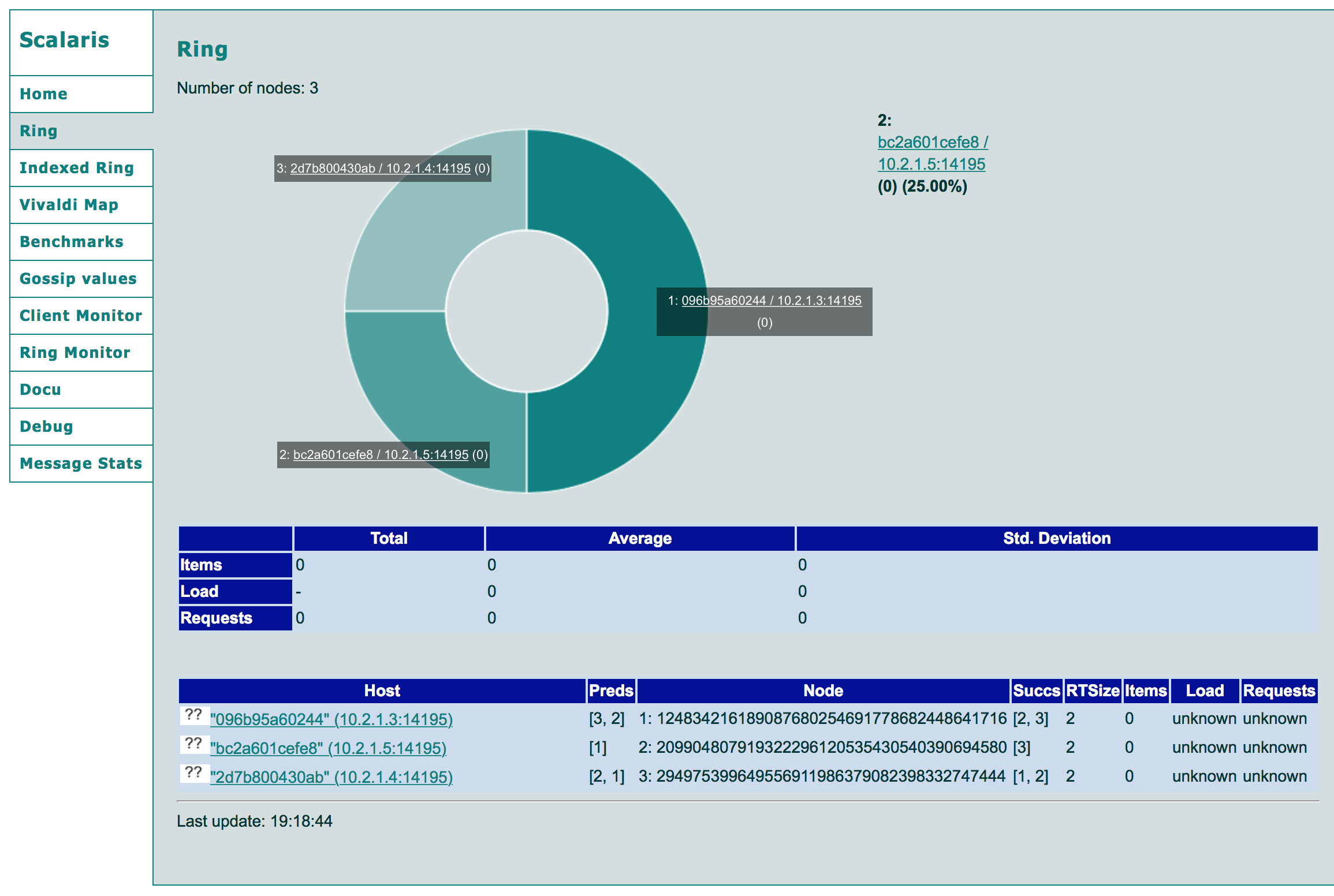

Accessing the Scalaris Dashboard

We can now monitor the state of the cluster through the Scalaris dashboard, served on port 8000 of each host thanks to the -p 8000:8000 mapping.
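The dashboards can also be checked from the command line. This sketch assumes the hosts are reachable at 192.168.42.100 to 192.168.42.102 (inferred from the weave launch commands) and relies on the -p 8000:8000 mapping. dashboard_urls and check_dashboards are hypothetical helpers, and check_dashboards needs curl.

```bash
#!/usr/bin/env bash
# Sketch: list the dashboard URLs and probe each one with curl.
# Assumes the host IPs below and the -p 8000:8000 port mapping.

HOST_IPS=(192.168.42.100 192.168.42.101 192.168.42.102)

# dashboard_urls: prints one dashboard URL per host.
dashboard_urls() {
  local ip
  for ip in "${HOST_IPS[@]}"; do echo "http://$ip:8000/"; done
}

# check_dashboards: prints each URL with the HTTP status code it returned
# (curl reports 000 when the host cannot be reached within 5 seconds).
check_dashboards() {
  local url
  while read -r url; do
    echo "$url $(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")"
  done < <(dashboard_urls)
}

# Usage, from the machine running VirtualBox:
#   check_dashboards
```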

Normally, you should see the dashboard below:

You can test the cluster by adding some Key/Value pairs. It works!

Conclusion

Weave lets us use application containers in a distributed fashion across machines and networks, managing them as if they were bare-metal hosts. I chose Scalaris, but you can run anything with Weave: Riak, Cassandra or whatever else. The Weave blog presents several use cases, one of which is dedicated to Cassandra (that article made me curious to try it with Scalaris, go read it!).

There is no doubt that this is a huge step forward for the Docker community. Docker is a great building block for the cloud, and the community keeps bringing new tools around it. This makes Docker even more appealing for industrial use cases.

Weave is one of those tools which makes our life easier. It brings the flexibility needed to create consistent architectures by avoiding Docker's convoluted networking model.

The near future looks very promising for Docker: we'll see it coupled with Weave, Flocker (data container management) or even CRIU (live migration) to build fully resilient architectures with minimal administration overhead.
